What is TensorFlow?

TensorFlow is a machine learning library that lets you create neural networks. A neural network can recognise patterns in complex data. In this tutorial I want to create a simple classification network. The goal is to get to grips with the terminology, pitfalls and basic workflow of TensorFlow.js.

Neural Networks

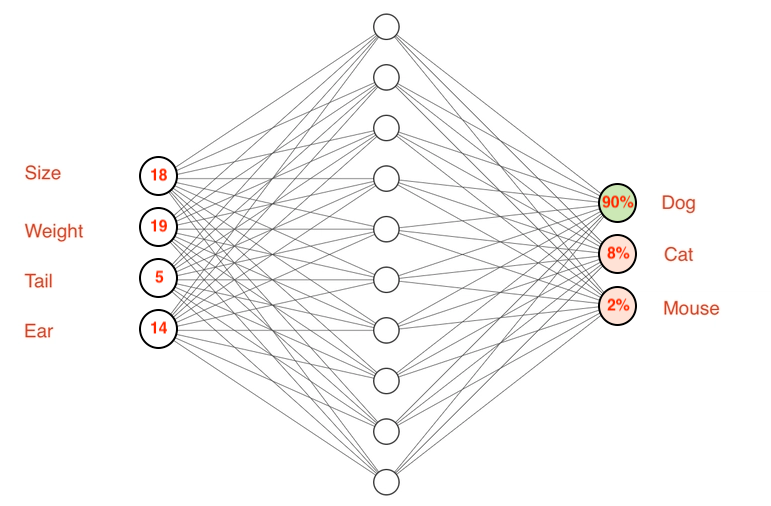

A neural network is a collection of cells, from inputs to outputs, that are connected to each other. The idea is that this network can learn from the data it is presented with. In this tutorial we use animal features (size, weight, tail length and ear length) to teach the network to recognise dogs, cats and mice.

- This animation shows how a single-cell brain learns that cats have claws, but dogs don't.

- You can build your own neural network in the TensorFlow playground

Data

Today's goal is to create a classification network that can learn to recognise dogs, cats and mice by looking at their features: size, weight, tail length, and ear length.

Take a look at this demo dataset. We have 12 animals, each with features and a label.

const data = [

[[18, 19, 5, 14], "dog"],

[[17, 18, 4, 13], "dog"],

[[19, 10, 6, 15], "dog"],

[[16, 17, 3, 14], "dog"],

[[3, 4, 8, 7], "cat"],

[[4, 3, 9, 6], "cat"],

[[3, 5, 7, 8], "cat"],

[[4, 4, 8, 7], "cat"],

[[0.5, 0.5, 2, 1], "mouse"],

[[0.3, 0.3, 1, 1], "mouse"],

[[0.7, 0.8, 3, 2], "mouse"],

[[0.6, 0.4, 2, 1], "mouse"]

];

- Since our animals have four features (size, weight, tail, ears), our network has four input cells.

- Since our dataset contains three kinds of animals, the network has three output cells.

- In between there are hidden layers. In this example we put one hidden layer with 10 cells.
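To make the shape of this network concrete, here is a small sketch in plain JavaScript (no TensorFlow.js involved). It derives the number of input and output cells from a shortened copy of the dataset and counts how many numbers the network has to learn. The names `sample`, `layerSizes` and `countParameters` are made up for this illustration, and the count assumes fully connected layers with one bias per cell.

```javascript
// A shortened copy of the dataset: one example per kind of animal is enough here.
const sample = [
  [[18, 19, 5, 14], "dog"],
  [[3, 4, 8, 7], "cat"],
  [[0.5, 0.5, 2, 1], "mouse"]
];

// Number of input cells = number of features per animal.
const inputCells = sample[0][0].length;

// Number of output cells = number of distinct labels.
const outputCells = new Set(sample.map(([, label]) => label)).size;

// One hidden layer with 10 cells, as described in the text.
const layerSizes = [inputCells, 10, outputCells];

// Assumption: every cell has one weight per cell in the previous layer, plus one bias.
function countParameters(sizes) {
  let total = 0;
  for (let i = 1; i < sizes.length; i++) {
    total += sizes[i - 1] * sizes[i] + sizes[i];
  }
  return total;
}

console.log(layerSizes);                  // [ 4, 10, 3 ]
console.log(countParameters(layerSizes)); // 83
```

The 83 breaks down into 4 × 10 + 10 = 50 numbers for the hidden layer and 10 × 3 + 3 = 33 for the output layer.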

Let's start coding!

In this example I set up a frontend Vite project with VanillaJS. The frontend will give us some nice visualisations.

npm create vite@latest

cd _your_project_name_

npm install @tensorflow/tfjs

npm install @tensorflow/tfjs-vis

From now on, we can use TensorFlow in our JS files!

import * as tf from '@tensorflow/tfjs';

import * as tfvis from '@tensorflow/tfjs-vis';

Creating the neural network

We can grab a code example for classification from the TensorFlow.js website.

By using classificationModel.add() we can add layers to the network.

const classificationModel = tf.sequential();

classificationModel.add(tf.layers.dense({ units: 10, inputShape: [4], activation: 'relu' })); // hidden layer with input layer

classificationModel.add(tf.layers.dense({ units: 3, activation: 'softmax' })); // cat, dog, mouse

classificationModel.compile({

loss: 'categoricalCrossentropy',

optimizer: 'adam',

metrics: ['accuracy']

});

- The input layer does not have to be added separately. Instead, the input layer (with 4 cells) is declared through the inputShape property of the first hidden layer (with 10 cells).

- Then, we add the output layer with 3 cells.

- The relu activation is a function that decides how strongly a cell passes its signal on: negative values become 0, positive values pass through unchanged.

- The softmax activation determines how the final result is shown in the 3 output cells. In this case, each output is a value between 0 and 1 that can be read as a percentage. All three outputs together add up to 100%.
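The two activations can be sketched in a few lines of plain JavaScript. This is only an illustration of the maths, not how TensorFlow.js implements them internally, and the raw scores passed to `softmax` are hypothetical numbers picked for the example.

```javascript
// relu: negative values become 0, positive values pass through unchanged.
function relu(x) {
  return Math.max(0, x);
}

// softmax: turns a list of raw scores into values between 0 and 1 that sum to 1.
function softmax(scores) {
  const max = Math.max(...scores); // subtracting the max keeps Math.exp from overflowing
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

console.log([-2, 0, 3.5].map((x) => relu(x))); // [ 0, 0, 3.5 ]

// Hypothetical raw scores for the three output cells (dog, cat, mouse).
const probabilities = softmax([1.0, 2.1, 0.2]);
console.log(probabilities);
console.log(probabilities.reduce((a, b) => a + b, 0)); // very close to 1
```

The largest raw score always ends up with the largest probability, so softmax changes how the result is presented without changing which animal wins.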

Training

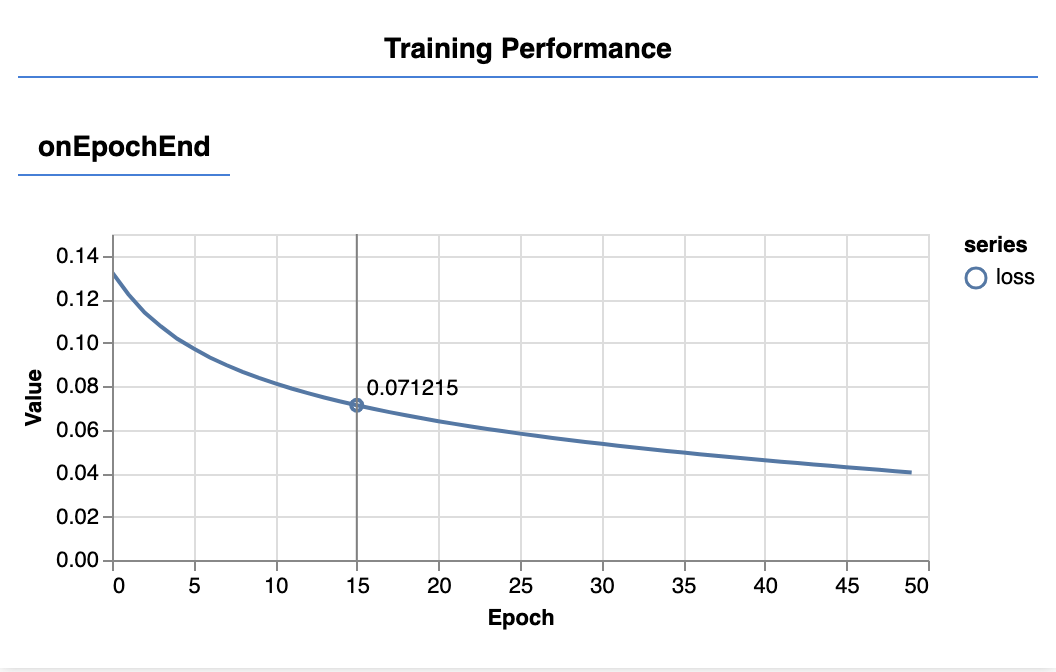

Training the model can be done with one line of code. We use 50 epochs to improve the model. In one epoch, the model sees all the training data once and adjusts itself to make better predictions. More epochs usually improve the accuracy on the training data, up to a point.

await classificationModel.fit(trainingData, labels, { epochs: 50 });

⚠️ The fit function expects separate training data and labels though! That means we have to split our data into two arrays:

const data = [[18, 19, 5, 14], [...], [...], ...etc]

const labels = ["dog", "dog", "dog", "dog", "cat", ...etc]

Tensors

For the fit function to work, the data and label arrays need to be TensorFlow tensors. This allows the calculations to run on the GPU of your machine. But this presents us with another problem: a tensor consists of numbers, but our labels are strings...

For classification tasks, the method for converting text labels into tensors that work for prediction is called one-hot encoding. That means each label becomes associated with one of the three output cells of the neural network.

If you look at the illustration above, we could say that:

- dog is [1,0,0]

- cat is [0,1,0]

- mouse is [0,0,1]
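The split into features and labels, and the one-hot mapping, can also be written as a small plain JavaScript sketch. The helper name `oneHot` and the variables `animals`, `features` and `encodedLabels` are made up for this illustration, and the dataset is shortened to one animal per class.

```javascript
// The class order decides which output cell belongs to which label.
const classNames = ["dog", "cat", "mouse"];

// A shortened copy of the dataset from the Data section.
const animals = [
  [[18, 19, 5, 14], "dog"],
  [[3, 4, 8, 7], "cat"],
  [[0.5, 0.5, 2, 1], "mouse"]
];

// Turn one text label into an array with a single 1 at the label's index.
function oneHot(label, classes) {
  const index = classes.indexOf(label);
  if (index === -1) throw new Error(`Unknown label: ${label}`);
  return classes.map((_, i) => (i === index ? 1 : 0));
}

// Split the combined rows into two parallel arrays.
const features = animals.map(([values]) => values);
const encodedLabels = animals.map(([, label]) => oneHot(label, classNames));

console.log(features);      // [ [ 18, 19, 5, 14 ], [ 3, 4, 8, 7 ], [ 0.5, 0.5, 2, 1 ] ]
console.log(encodedLabels); // [ [ 1, 0, 0 ], [ 0, 1, 0 ], [ 0, 0, 1 ] ]
```

Two plain arrays like these are the kind of input that tf.tensor2d() accepts.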

We will now convert all our data manually so you can see what's going on:

const trainingData = tf.tensor2d([

[8, 9, 5, 4], // large dog

[7, 8, 4, 3], // medium dog

[9, 10, 6, 5], // big dog

[6, 7, 3, 4], // smaller dog

[3, 4, 8, 7], // cat

[4, 3, 9, 6], // cat

[3, 5, 7, 8], // cat

[4, 4, 8, 7], // cat

[0.5, 0.5, 2, 1], // mouse

[0.3, 0.3, 1, 1], // tiny mouse

[0.7, 0.8, 3, 2], // mouse

[0.6, 0.4, 2, 1] // mouse

]);

const labels = tf.tensor2d([

[1, 0, 0], // dog

[1, 0, 0], // dog

[1, 0, 0], // dog

[1, 0, 0], // dog

[0, 1, 0], // cat

[0, 1, 0], // cat

[0, 1, 0], // cat

[0, 1, 0], // cat

[0, 0, 1], // mouse

[0, 0, 1], // mouse

[0, 0, 1], // mouse

[0, 0, 1] // mouse

]);

Now our data should work with the fit function! Try it out!

Predicting

Once your fit function has finished running (this is an asynchronous process), you can start making predictions with your model.

This means that we feed 4 numbers into 4 input cells of the network, and then we check what the values are of the 3 output cells of the network!

// Predict an animal with features 4,5,3,4

const testData = tf.tensor2d([[4, 5, 3, 4]]);

const prediction = classificationModel.predict(testData);

console.log('Prediction probabilities:');

prediction.print();

This will output a tensor that looks like this. These are the values of the last 3 cells in the neural network!

[[0.2227311, 0.6748303, 0.1024387],]

Since we decided that the 3 output cells stand for [dog, cat, mouse], you can read the prediction as follows:

- 22% chance of "dog"

- 67% chance of "cat"

- 10% chance of "mouse"

So the animal is most likely a cat 🐈! Congratulations! You have now got the bare basics of TensorFlowJS working!
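Reading the output by hand like this can be mirrored in plain JavaScript. The sketch below copies the three printed values into a normal array, so no tensors are involved. The helper `argMax` is written out here for illustration only.

```javascript
// The three output values from the example above, copied into a plain array.
const probabilities = [0.2227311, 0.6748303, 0.1024387];
const classNames = ["dog", "cat", "mouse"];

// Show each probability as a whole percentage (rounded down).
probabilities.forEach((p, i) => {
  console.log(`${Math.floor(p * 100)}% chance of "${classNames[i]}"`);
});

// Find the index of the largest value.
function argMax(values) {
  let best = 0;
  for (let i = 1; i < values.length; i++) {
    if (values[i] > values[best]) best = i;
  }
  return best;
}

console.log(classNames[argMax(probabilities)]); // cat
```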

Showing the actual label

It would be nicer to show the actual predicted label in the console. To do that, we first need to get the tensor data back from the GPU into a normal JavaScript variable. This is done using tensor.dataSync().

const predictedClass = prediction.argMax(1).dataSync()[0];

This gives the index of the highest value, so 0, 1 or 2. We use the index to get the right label:

const classNames = ['dog', 'cat', 'mouse'];

const className = classNames[predictedClass];

console.log(`I think it's a: ${className}`);

Visualising training

In our current code we have no idea how many epochs we need, or whether our one hidden layer is enough to fit the data. We can visualise the training with the TensorFlow.js visor library (tfjs-vis).


await classificationModel.fit(trainingData, labels, {

epochs: 250,

callbacks: tfvis.show.fitCallbacks({ name: 'Training' }, ['loss'], {

callbacks: ['onBatchEnd']

})

});

tfvis.show.modelSummary({ name: 'Model' }, classificationModel);

This example plots the loss during training. This number should converge towards 0.0 during training. It will never reach 0 though! Try to get it to reach between 0.2 and 0.4 for this exercise, by adjusting the number of epochs.
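To get a feeling for what the loss number means, here is a plain JavaScript sketch of categorical cross-entropy for a single example: minus the natural logarithm of the probability that the network gave to the correct class. The function below is written for illustration. TensorFlow.js's own implementation may differ in details such as averaging over a batch. The first example assumes that the animal behind the earlier prediction really was a cat, which the tutorial does not state.

```javascript
// Categorical cross-entropy for one example:
// minus the natural log of the probability given to the correct class.
function categoricalCrossentropy(oneHotLabel, probabilities) {
  let loss = 0;
  for (let i = 0; i < oneHotLabel.length; i++) {
    if (oneHotLabel[i] === 1) loss -= Math.log(probabilities[i]);
  }
  return loss;
}

const cat = [0, 1, 0];

// The prediction from earlier: 67% sure it is a cat.
const unsure = categoricalCrossentropy(cat, [0.2227311, 0.6748303, 0.1024387]);
console.log(unsure.toFixed(2)); // roughly 0.39

// A hypothetical, much more confident prediction: 98% sure it is a cat.
const confident = categoricalCrossentropy(cat, [0.01, 0.98, 0.01]);
console.log(confident.toFixed(2)); // roughly 0.02
```

The more probability the network puts on the correct label, the closer the loss gets to 0. It only reaches exactly 0 when the correct class gets a probability of exactly 1.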

Putting it all together

We should organise our code a bit more neatly into functions.

We also have to dispose of created tensor objects! This can be done using dispose() and tf.tidy().

let classificationModel

async function createModel() {

// create a classification model

classificationModel = tf.sequential();

classificationModel.add(tf.layers.dense({ units: 10, inputShape: [4], activation: 'relu' }));

classificationModel.add(tf.layers.dense({ units: 3, activation: 'softmax' }));

classificationModel.compile({

loss: 'categoricalCrossentropy',

optimizer: 'adam',

metrics: ['accuracy']

});

// train the model

await classificationModel.fit(trainingData, labels, {

epochs: 250,

callbacks: tfvis.show.fitCallbacks({ name: 'Training' }, ['loss'], {

callbacks: ['onBatchEnd']

})

});

tfvis.show.modelSummary({ name: 'Model' }, classificationModel);

// clear the training data tensors from memory

trainingData.dispose();

labels.dispose();

}

// predict an animal. use tf.tidy to remove the temporary tensors right away

function predict(animal) {

return tf.tidy(() => {

const testData = tf.tensor2d([animal]);

const prediction = classificationModel.predict(testData);

console.log('Prediction probabilities:');

prediction.print();

const predictedClass = prediction.argMax(1).dataSync()[0];

const classNames = ['dog', 'cat', 'mouse'];

return classNames[predictedClass];

});

}

await createModel()

let result = predict([5, 6, 7, 6])

console.log(`I think it's a ${result}`);

🤩 That's it! The code is still pretty concise if you consider everything that's happening under the hood!

Tutorial part 2

In the next tutorial we will look at some necessary improvements:

- We should create a function that converts the training labels automatically into the "one-hot-encoding" format

- Data should be normalized before training, otherwise the model will incorrectly assume that a feature with a bigger range is more important than a feature with lower range.

- We should create test data and an evaluate function to test if our model actually predicts well on unknown, but labeled, animal data.

- We should be able to save a model after training
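As a rough preview of the normalisation point, here is one common approach (min-max scaling) sketched in plain JavaScript. It rescales every feature column to the range 0 to 1 so that no feature dominates just because its numbers are bigger. The function name `normalise` and the three sample rows are chosen for this illustration. The next tutorial may well take a different approach.

```javascript
// Rescale every feature column to the range 0..1 (min-max normalisation).
function normalise(rows) {
  const columns = rows[0].length;
  const mins = Array(columns).fill(Infinity);
  const maxs = Array(columns).fill(-Infinity);

  // First pass: find the smallest and largest value in each column.
  for (const row of rows) {
    row.forEach((value, c) => {
      mins[c] = Math.min(mins[c], value);
      maxs[c] = Math.max(maxs[c], value);
    });
  }

  // Second pass: map each value to its position between min and max.
  return rows.map((row) =>
    row.map((value, c) => {
      const range = maxs[c] - mins[c];
      return range === 0 ? 0 : (value - mins[c]) / range;
    })
  );
}

// One dog, one cat and one mouse from the dataset.
const rows = [
  [18, 19, 5, 14],
  [3, 4, 8, 7],
  [0.5, 0.5, 2, 1]
];

const normalised = normalise(rows);
console.log(normalised);
```

With this kind of scaling, the same minimum and maximum values would also have to be applied to any new animal before asking the model for a prediction.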